Penn State Unveils FairGNN: Transforming How Social Networks Handle Bias

Social media feeds and friend suggestions often look eerily familiar. Ever notice how those recommended connections seem to know your background—sometimes a little too well? That isn’t magic—it’s machine learning. But there’s a growing concern that these systems, which power recommendations on sites like Facebook and LinkedIn, just reinforce social bubbles. Enter a new approach from researchers at Penn State: FairGNN.

FairGNN targets a specific weakness in how AI handles connections. Traditional graph neural networks (GNNs), the kind used to map relationships and recommend friends, rely on easy-to-pull data like where you went to school, where you live, or the groups you follow. But here’s the problem: those factors are strongly linked to more sensitive traits, like your gender or skin color. That means GNNs can unintentionally help create networks where people with similar sensitive backgrounds stick together, and others get left out.

How FairGNN Works Differently—and Why It Matters

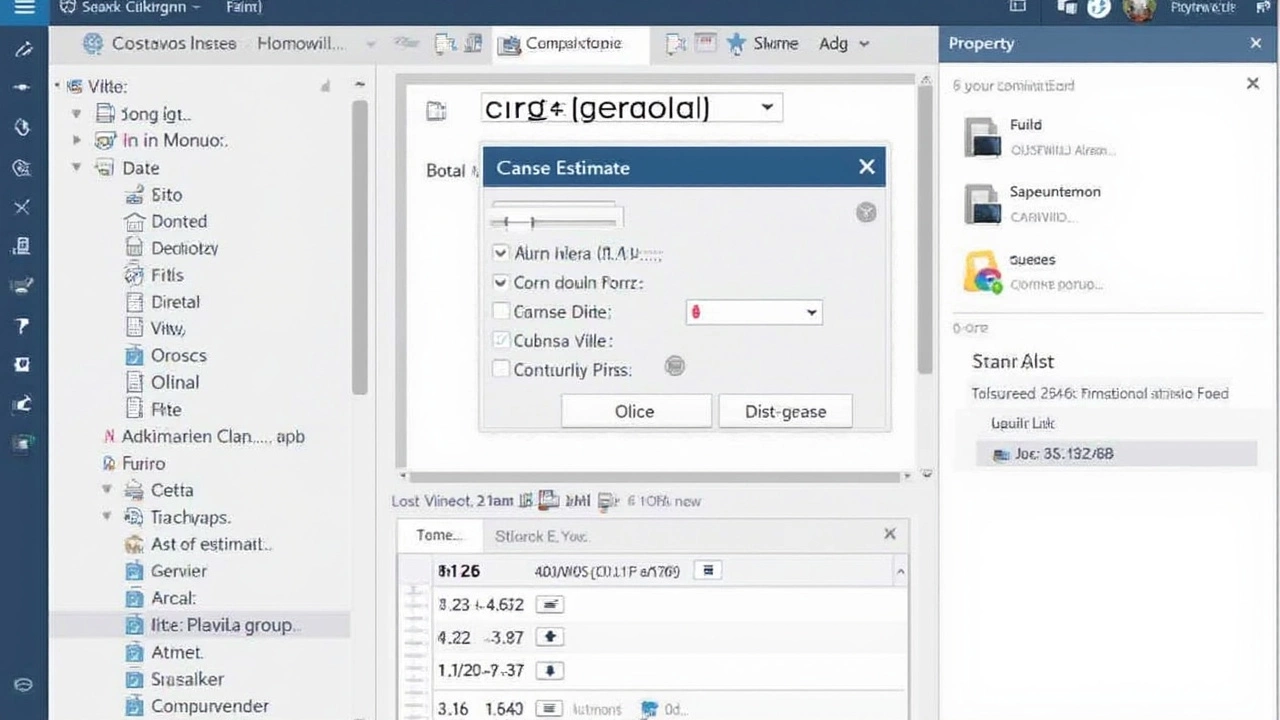

Now, here’s the twist. Most attempts to fix this bias ask users to provide sensitive details up front so the system can specifically correct for them. But who really wants to hand over more private data? FairGNN sidesteps that. Instead of asking people to reveal sensitive info, it uses clues from a handful of disclosed details and patterns in the network itself to estimate these traits behind the scenes. This means FairGNN can spot and minimize bias while keeping users’ sensitive data private.
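To make the idea of "estimating traits from a handful of disclosed details and network patterns" concrete, here is a minimal sketch. It is not FairGNN's actual estimator; it swaps in a much simpler technique, label propagation, in which each undisclosed user's score is repeatedly set to the average of their friends' scores. The function name `propagate_labels`, the toy graph, and the 0.5 starting value are all assumptions made for illustration.

```python
from collections import defaultdict


def propagate_labels(edges, known, num_rounds=20):
    """Estimate a binary trait score in [0, 1] for every user in the graph.

    edges: list of (u, v) pairs, treated as undirected friendships.
    known: dict mapping the few users who disclosed the trait to 0.0 or 1.0.
    Assumption: friends tend to share the trait, so averaging is informative.
    """
    neighbors = defaultdict(set)
    for u, v in edges:
        neighbors[u].add(v)
        neighbors[v].add(u)

    # Undisclosed users start at 0.5, meaning "no information either way".
    scores = {node: known.get(node, 0.5) for node in neighbors}

    for _ in range(num_rounds):
        updated = {}
        for node in scores:
            if node in known:
                # Disclosed values are kept fixed on every round.
                updated[node] = known[node]
            else:
                friends = neighbors[node]
                updated[node] = sum(scores[f] for f in friends) / len(friends)
        scores = updated
    return scores


# Hypothetical toy network: two tight friend groups joined by one link (c-d).
edges = [("a", "b"), ("b", "c"), ("a", "c"),
         ("d", "e"), ("e", "f"), ("d", "f"),
         ("c", "d")]
# Only two of the six users disclosed the trait.
known = {"a": 1.0, "f": 0.0}

scores = propagate_labels(edges, known)
for user in sorted(scores):
    print(user, round(scores[user], 2))
```

In this toy network, users in the same friend group as "a" end up with scores above 0.5 and those grouped with "f" end up below it, even though only two people disclosed anything. A system could then use such estimates to check its recommendations for bias without collecting the trait from everyone.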

The key is balancing performance and fairness. FairGNN was evaluated on "node classification" tasks, where a model tries to predict something about a person based on their position in the network. In these tests, FairGNN kept results accurate while showing much less bias than older models. In other words, the fairness gains did not come with a major trade-off in accuracy.
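What does "accurate but with much less bias" look like in numbers? The article does not say which measurements were used, so the sketch below assumes one common choice: statistical parity difference, the gap between two groups in how often the model predicts a positive outcome. The helper names and the toy data are hypothetical and are not results from the FairGNN experiments.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def statistical_parity_difference(predictions, groups):
    """Absolute gap in positive-prediction rate between group 0 and group 1.

    0.0 means both groups receive positive predictions equally often;
    larger values mean the model favors one group.
    """
    rates = []
    for group in (0, 1):
        members = [p for p, g in zip(predictions, groups) if g == group]
        if not members:
            raise ValueError(f"no examples for group {group}")
        rates.append(sum(members) / len(members))
    return abs(rates[0] - rates[1])


# Made-up toy data: 8 users, 4 in each sensitive group.
labels = [1, 0, 1, 0, 1, 0, 1, 0]
groups = [0, 0, 0, 0, 1, 1, 1, 1]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

acc = accuracy(predictions, labels)
gap = statistical_parity_difference(predictions, groups)
print("accuracy:", acc)
print("parity gap:", gap)
```

A fairness-aware model aims to shrink the parity gap toward zero while keeping accuracy close to what a conventional model achieves.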

Lead researcher Enyan Dai explained that the model only needs “minimal” direct information about someone’s sensitive characteristics. The real heavy lifting comes from FairGNN’s ability to infer broader patterns from smaller pieces. That’s a big win for privacy.

The potential uses go beyond just smarter friend suggestions. Recommendation systems shape the jobs we see, the groups we are invited to, and the ideas that fill our feeds. If networks can build more varied connections—mixing backgrounds and breaking up echo chambers—the ripple effects could reach into hiring, education, and even public conversation.

Platforms like Facebook or LinkedIn could take FairGNN and turn it into background code that quietly makes your feed (and your world) a lot less predictable. Instead of always sending you more of the same, they could start surfacing connections you would never have seen under the old, bias-heavy algorithms.

Right now, FairGNN exists mostly as a research result, but as AI fairness fixes like this one catch on, the way we connect online could start to look, and feel, a whole lot different.
